Unleashing the Power of ChatGPT and Prompts in Node.js using LangChain

Integrate an LLM like GPT-3.5 in JavaScript and get the output as JSON

Introduction

Chatbots have been an integral part of modern communication and customer service systems. With the advent of AI and NLP, chatbots have become more intelligent and efficient in handling customer queries and providing personalized responses. One such powerful tool is ChatGPT, a large language model trained by OpenAI based on the GPT-3.5 architecture.

LangChain is a framework with a JavaScript/TypeScript library that makes it easier to use large language models (LLMs) in Node.js applications. It provides a high-level API that abstracts away the details of interacting with LLMs, so you can focus on building your application. It supports a variety of model providers, including OpenAI and Hugging Face, along with features such as prompt templates, chaining, and output parsing.

In this article, we will explore how to integrate ChatGPT in a Node.js application using LangChain to get a response in JSON format.

Prerequisites

To integrate ChatGPT in a Node.js application using LangChain, you need to have the following:

A basic understanding of Node.js and JavaScript

OpenAI API key

Node.js 18+ installed on your computer

Getting Started

The first step is to create a new Node.js project. You can do this using the following command:

npm init -y

Once the project is created, install the LangChain library:

npm install langchain

Install the Zod package as well. It is used to define the schema for the structured JSON output we expect from the OpenAI model:

npm install zod

Create OpenAI Client

import { ChatOpenAI } from "langchain/chat_models/openai";

function openaiClient() {

const chat = new ChatOpenAI({

openAIApiKey: process.env.OPENAI_API_KEY,

modelName: "gpt-3.5-turbo"

});

return chat;

}

This function creates an instance of the ChatOpenAI client, a wrapper around the OpenAI API. The constructor takes the API key (read here from the OPENAI_API_KEY environment variable) and the name of the model to use, and the function returns the client instance. You can create an API key from your OpenAI account.
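Since the client depends on the OPENAI_API_KEY environment variable, it can help to check for the key explicitly so that a missing value produces a clear error message. The following is a minimal sketch; requireEnv is a hypothetical helper and not part of LangChain, and the key shown is a placeholder.

```typescript
// Hypothetical helper (not part of LangChain): read a required variable
// from an environment-like object and fail fast if it is missing or blank.
function requireEnv(
  name: string,
  env: Record<string, string | undefined>
): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Example with a stand-in environment object and a placeholder key.
// In the article's code you would pass process.env instead.
const fakeEnv: Record<string, string | undefined> = {
  OPENAI_API_KEY: "sk-example",
};
const apiKey = requireEnv("OPENAI_API_KEY", fakeEnv);
```

The returned value could then be passed as openAIApiKey when constructing the client.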

Creating Structured Prompts using Zod Schema

Prompts are the most important component in generating the desired output from a Large Language Model like GPT-3.

Writing effective prompts is a trending skill in the tech industry. I have written a complete article on writing effective prompts for these LLMs here. Please check it out before moving on to the next step.

Let’s start with a real-world use case of working with Prompts.

Suppose we want a list of states and union territories of India, along with the top 5 districts of each state or UT by population. Here is the prompt that will be passed to the model.

promptText:

You are a Geography Expert and you have a great knowledge of Map of India.

Your task: Generate a JSON data which contains a list of 10 states and 5 union territories of India.

Add the List of top 5 districts of that state or UT based on size of population of the district

sorted in descending order.
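If you expect to vary the numbers in this prompt, one option is to build the text from parameters. This is only a sketch; buildGeographyPrompt is a hypothetical helper, and the wording is taken from the prompt above.

```typescript
// Hypothetical helper: build the article's prompt with configurable counts.
function buildGeographyPrompt(
  stateCount: number,
  utCount: number,
  topN: number
): string {
  return [
    "You are a Geography Expert and you have a great knowledge of Map of India.",
    `Your task: Generate a JSON data which contains a list of ${stateCount} states and ${utCount} union territories of India.`,
    `Add the List of top ${topN} districts of that state or UT based on size of population of the district sorted in descending order.`,
  ].join("\n");
}

// Reproduces the prompt used in this article.
const promptText = buildGeographyPrompt(10, 5, 5);
```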

Creating a JSON Output Schema using Zod

Here we need to define the JSON schema that we are expecting as output using the Zod schema package.

You can also find many different data types supported by Zod here.

import { z } from "zod";

// create zod schema

const zodSchema = z.object({

states: z.array(

z.object({

"stateName": z.string().describe("Name of states in India"),

"districtList": z.array(

z.string().describe("List of top 5 district in this state")

)

})

),

unionTerritories: z.array(

z.object({

"UTName": z.string().describe("Name of Each Union Territories in India"),

"districtList": z.array(

z.string().describe("List of top 5 district in this territory")

).optional()

})

)

})

The schema defines the structure and types of data that should be present in the JSON object.

It consists of two arrays, states and unionTerritories. Each element is an object with a name property (stateName or UTName, a string) and a districtList property, an array of strings holding the top five districts of that state or union territory. Note that the schema itself does not cap the length of the array; the limit of five comes from the prompt and the field descriptions.

The describe method attaches a description to a property in the schema, which helps tell the model what each field should contain. The optional method marks the district list of a union territory as optional, so the output still validates if the model is not able to generate it.
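For reference, the shape described above can also be written as plain TypeScript types. This is a hand-written sketch rather than something generated by Zod (Zod can derive a static type from a schema with z.infer). The interface names, the sample values, and the isStringArray helper are illustrative assumptions.

```typescript
// Hand-written types mirroring the shape the schema describes.
interface StateEntry {
  stateName: string;
  districtList: string[];
}

interface UnionTerritoryEntry {
  UTName: string;
  districtList?: string[]; // optional, as described in the text above
}

interface IndiaRegions {
  states: StateEntry[];
  unionTerritories: UnionTerritoryEntry[];
}

// Illustrative sample with placeholder names; this is not model output.
const sample: IndiaRegions = {
  states: [
    { stateName: "Example State", districtList: ["District A", "District B"] },
  ],
  unionTerritories: [{ UTName: "Example UT" }],
};

// Minimal runtime check that a value is an array of strings.
function isStringArray(value: unknown): value is string[] {
  return Array.isArray(value) && value.every((item) => typeof item === "string");
}
```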

Creating an Output Parser

ChatGPT returns its response as plain text, so we need a StructuredOutputParser to turn the output of the model into a structured object.
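To make the parsing step concrete before the LangChain version, here is a simplified sketch of the underlying idea: pull the JSON payload out of the text reply, which may be wrapped in a Markdown code fence, and run JSON.parse on it. This is not the LangChain implementation; extractJson is a hypothetical name, the reply is made up, and real replies may need more tolerant handling plus validation against the schema.

```typescript
// Three backticks, built at runtime so this listing contains no literal fence.
const FENCE = "`".repeat(3);

// Hypothetical helper: extract and parse JSON from a model reply that may
// wrap the payload in a Markdown code fence (optionally tagged "json").
function extractJson(reply: string): unknown {
  const fenced = new RegExp(FENCE + "(?:json)?\\s*([\\s\\S]*?)" + FENCE);
  const match = reply.match(fenced);
  // Fall back to the whole reply when no fenced block is present.
  const payload = match?.[1] ?? reply;
  return JSON.parse(payload.trim());
}

// Made-up reply for illustration.
const reply =
  "Here is the data:\n" + FENCE + "json\n" + '{"states": []}' + "\n" + FENCE;
const parsed = extractJson(reply) as { states: unknown[] };
```

JSON.parse throws on malformed input, which is the failure case the output fixing parser described later is meant to handle.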

import { StructuredOutputParser } from "langchain/output_parsers";

import { PromptTemplate } from "langchain/prompts";

async function generatePromptAndParser (promptText: string, zodSchema: any): Promise<[string, any]> {

// Create output Parser

const parser = StructuredOutputParser.fromZodSchema(zodSchema);

// format the Zod schema instructions into a string

const formatInstructions = parser.getFormatInstructions();

//creating the prompt using prompt template helper from Langchain

const prompt = new PromptTemplate({

template: "{promptText}\n{formatInstructions}",

inputVariables: ["promptText"],

partialVariables: { formatInstructions }

});

// Final prompt after formatting all the instructions into a String.

// This final prompt will be given to the model

const finalPrompt = await prompt.format({

promptText

});

return [finalPrompt, parser]

}
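Conceptually, formatting the template replaces each placeholder in curly braces with its value. The sketch below is a simplified stand-in for that step and not the LangChain code; fillTemplate is a hypothetical name and the values are made up.

```typescript
// Simplified stand-in for template formatting (not LangChain's code):
// replace each {name} placeholder with the matching value.
function fillTemplate(
  template: string,
  values: Record<string, string>
): string {
  return template.replace(/\{(\w+)\}/g, (placeholder: string, key: string) => {
    const value = values[key];
    if (value === undefined) {
      throw new Error(`No value supplied for ${placeholder}`);
    }
    return value;
  });
}

// Same template string as in the function above, with made-up values.
const finalPrompt = fillTemplate("{promptText}\n{formatInstructions}", {
  promptText: "List 10 states of India.",
  formatInstructions: "Respond with JSON only.",
});
```

In the article's code, formatInstructions is supplied once as a partial variable, and promptText is supplied when format is called.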

Creating JSON Output Fixing Parser

The OutputFixingParser handles output that fails to parse. When the structured parser throws an error, it passes the malformed output back to the model and asks for a corrected response that matches the expected format.

import { OutputFixingParser } from "langchain/output_parsers";

/**

Parses the output using the given parser and returns the parsed output.

@param parser - The parser to be used for parsing the output.

@param output - The output string to be parsed.

@param chatClient - The openai chat client object to be used for fixing the output.

@returns A Promise that resolves to the parsed output or the fixed output.

*/

async function parseOutput (parser: any, output: string, chatClient: any): Promise<any> {

try {

// parse the output using the parser

const parsedOutput = await parser.parse(output);

return parsedOutput;

} catch (error) {

// If there is any error during parsing, try to fix it using OutputFixingParser

console.error(error);

const fixParser = OutputFixingParser.fromLLM(chatClient, parser);

const newOutput = await fixParser.parse(output);

return newOutput;

}

}
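The control flow above is a parse-then-repair pattern. The sketch below shows the same pattern without any LangChain dependency; parseWithRepair and the repair callback are hypothetical names. In the article's code the repair role is played by OutputFixingParser, which calls the model, whereas the stub here only strips a trailing comma.

```typescript
// A repair step receives the bad output and the parse error,
// and resolves to a corrected string.
type Repair = (badOutput: string, error: unknown) => Promise<string>;

async function parseWithRepair<T>(
  output: string,
  parse: (text: string) => T,
  repair: Repair
): Promise<T> {
  try {
    return parse(output);
  } catch (error) {
    // One repair attempt; a second failure propagates to the caller.
    const repaired = await repair(output, error);
    return parse(repaired);
  }
}

// Stub repair step for illustration: removes a trailing comma before "}".
const stripTrailingComma: Repair = async (bad) => bad.replace(/,\s*}/g, "}");

// '{"a": 1,}' is invalid JSON, so the first parse fails and the stub runs.
const resultPromise = parseWithRepair<{ a: number }>(
  '{"a": 1,}',
  (text) => JSON.parse(text) as { a: number },
  stripTrailingComma
);
```

Limiting the repair to a single attempt keeps the cost bounded, since each repair in the real setup is an extra model call.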

Generating the JSON output using the GPT-3.5 model

Now, let’s call the OpenAI model to generate the output.

async function generateJsonOutput(): Promise<Object> {

// write the prompt

const promptText = `You are a Geography Expert and you have a great knowledge of Map of India.

Your task: Generate a JSON data which contains a list of 10 states and 5 union territories of India.

Add the List of top 5 districts of that state or UT based on size of population of the district sorted in descending order.`

// create zod schema

const zodSchema = z.object({

states: z.array(

z.object({

"stateName": z.string().describe("Name of states in India"),

"districtList": z.array(

z.string().describe("List of top 5 district in this state")

)

})

),

unionTerritories: z.array(

z.object({

"UTName": z.string().describe("Name of Each Union Territories in India"),

"districtList": z.array(

z.string().describe("List of top 5 district in this territory")

)

})

)

})

// Get the GPT model to get output

const chat = openaiClient(); // function defined above

const [finalPrompt, parser] = await generatePromptAndParser(promptText, zodSchema);

// SystemChatMessage is imported from "langchain/schema" (see Complete Code)

const chatResponse = await chat.call([new SystemChatMessage(finalPrompt)]);

// parse the output using above function

const parsedOutput = await parseOutput(parser, chatResponse.text, chat)

return parsedOutput;

}

The function above defines the prompt and the Zod schema, then calls openaiClient to get the chat model. It builds the final prompt and the parser with generatePromptAndParser, sends the prompt to the model, and stores the reply in the chatResponse variable.

The function uses the parseOutput function to parse the response from the GPT model using the parser created earlier. If there is an error during parsing, it tries to fix it using the OutputFixingParser class. Finally, the function returns the parsed output as an Object.
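The schema validates the shape of the output but not how many entries the model returned, so it may be worth comparing the counts with what the prompt asked for. The following is a minimal sketch; findCountProblems and RegionData are hypothetical names, and the sample data is a placeholder rather than model output.

```typescript
// Only the array lengths matter for this check, so elements are left untyped.
interface RegionData {
  states: unknown[];
  unionTerritories: unknown[];
}

// Hypothetical post-check: report any mismatch between the number of
// entries returned and the number requested in the prompt.
function findCountProblems(
  data: RegionData,
  expectedStates: number,
  expectedTerritories: number
): string[] {
  const problems: string[] = [];
  if (data.states.length !== expectedStates) {
    problems.push(
      `expected ${expectedStates} states, got ${data.states.length}`
    );
  }
  if (data.unionTerritories.length !== expectedTerritories) {
    problems.push(
      `expected ${expectedTerritories} union territories, got ${data.unionTerritories.length}`
    );
  }
  return problems;
}

// Placeholder data: 2 states instead of 10, and 5 territories as requested.
const problems = findCountProblems(
  { states: [{}, {}], unionTerritories: [{}, {}, {}, {}, {}] },
  10,
  5
);
// problems → ["expected 10 states, got 2"]
```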

Now call the above function to get the final output.

async function callFunc() {

const data = await generateJsonOutput();

return data

}

callFunc()

Note: All the code here is written in TypeScript. Converting it to JavaScript mainly means removing the type annotations.

Complete Code

import { ChatOpenAI } from "langchain/chat_models/openai";

import { SystemChatMessage } from "langchain/schema";

import { OutputFixingParser, StructuredOutputParser } from "langchain/output_parsers";

import { z } from "zod";

import { PromptTemplate } from "langchain/prompts";

function openaiClient() {

const chat = new ChatOpenAI({

openAIApiKey: process.env.OPENAI_API_KEY,

modelName: "gpt-3.5-turbo"

});

return chat;

}

// use output parser and Output fixing parser

async function parseOutput (parser: any, output: string, chatClient: any): Promise<any> {

try {

const parsedOutput = await parser.parse(output);

return parsedOutput;

} catch (error) {

console.error(error);

const fixParser = OutputFixingParser.fromLLM(chatClient, parser);

const newOutput = await fixParser.parse(output);

return newOutput;

}

}

async function generatePromptAndParser (promptText: string, zodSchema: any): Promise<[string, any]> {

// Create output Parser

const parser = StructuredOutputParser.fromZodSchema(zodSchema);

const formatInstructions = parser.getFormatInstructions();

const prompt = new PromptTemplate({

template: "{promptText}\n{formatInstructions}",

inputVariables: ["promptText"],

partialVariables: { formatInstructions }

});

const finalPrompt = await prompt.format({

promptText

});

return [finalPrompt, parser]

}

async function generateJsonOutput(): Promise<Object> {

const promptText = `You are a Geography Expert and you have a great knowledge of Map of India.

Your task: Generate a JSON data which contains a list of 10 states and 5 union territories of India.

Add the List of top 5 districts of that state or UT based on size of population of the district sorted in descending order.`

// create zod schema

const zodSchema = z.object({

states: z.array(

z.object({

"stateName": z.string().describe("Name of states in India"),

"districtList": z.array(

z.string().describe("List of top 5 district in this state")

)

})

),

unionTerritories: z.array(

z.object({

"UTName": z.string().describe("Name of Each Union Territories in India"),

"districtList": z.array(

z.string().describe("List of top 5 district in this territory")

)

})

)

})

// Call the GPT model to get output

const chat = openaiClient();

const [finalPrompt, parser] = await generatePromptAndParser(promptText, zodSchema);

const chatResponse = await chat.call([new SystemChatMessage(finalPrompt)]);

// parse the output using above function

const parsedOutput = await parseOutput(parser, chatResponse.text, chat)

console.log(parsedOutput);

return parsedOutput;

}

async function callFunc() {

const data = await generateJsonOutput();

return data

}

callFunc();

Running the code

tsc chatgpt.ts

node chatgpt.js

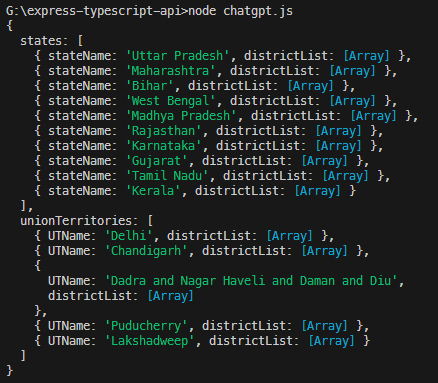

Output Generated

Output generated by the OpenAI model for the code in this article

Conclusion

Integrating ChatGPT in a Node.js application using LangChain is a simple and efficient way to leverage the power of AI and NLP in your chatbots. By following the steps outlined in this article, you can easily integrate ChatGPT into your Node.js application. With ChatGPT, you can build smarter chatbots that can provide personalized responses to your customers.